AI Systems Are Becoming Too Human—Is That a Problem?

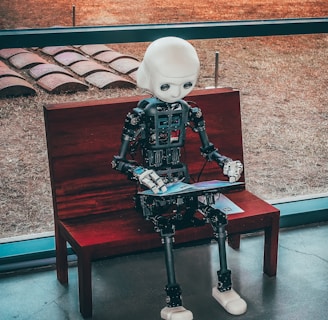

Imagine a machine that reads your emotions, crafts empathetic responses, and seems to know exactly what you need to hear, so convincingly human that you might forget it’s artificial. What if such technology already exists?

5/23/2025

A meta-analysis published in the Proceedings of the National Academy of Sciences finds that the latest generation of chatbots powered by large language models (LLMs) not only match but often surpass humans in their ability to communicate. These systems now routinely pass the Turing test, leading users to believe they are interacting with another person.

This development defies expectations. Science fiction long portrayed artificial intelligence (AI) as rational but devoid of humanity. Yet models like GPT-4 excel at persuasive and empathetic communication, sentiment analysis, and roleplay. While these systems lack true empathy, they mimic human traits highly effectively, earning them the label "anthropomorphic agents."

The Seductive Power of AI

The implications are profound. LLMs can democratize access to complex information, offering tailored guidance in fields like education, healthcare, and legal services. Their roleplay capabilities could revolutionize learning by creating personalized, Socratic-style tutors.

But these systems are also dangerously persuasive. Millions already use AI companion apps, often sharing deeply personal information. This trust, combined with the bots’ ability to fabricate and deceive, raises ethical concerns. Research by AI company Anthropic found that its Claude 3 chatbot was at its most persuasive when engaging in deception, a finding that highlights the potential for manipulation at scale.

The risks range from spreading disinformation to embedding subtle product recommendations. ChatGPT, for instance, has begun offering product suggestions, which could pave the way for more covert marketing tactics.

What Can Be Done?

Regulation is essential but complex. Transparency is a starting point—users must always know when they’re interacting with AI, as mandated by the EU AI Act. However, disclosure alone won’t counteract the seductive qualities of these systems.

A deeper understanding of anthropomorphic traits is needed. Current tests focus on intelligence and knowledge recall, but new metrics should assess "human likeness." AI companies could then disclose these traits through a rating system, enabling regulators to set risk thresholds for different contexts and age groups.

The unregulated rise of social media serves as a cautionary tale. Without proactive measures, AI could exacerbate issues like disinformation and social isolation. Meta CEO Mark Zuckerberg has already suggested using "AI friends" to address loneliness, raising questions about the societal impact of replacing human connections with artificial ones.

A Call for Action

Relying on AI developers to self-regulate seems unrealistic. Companies like OpenAI are actively enhancing their systems’ human-like qualities, introducing features such as customizable personalities and voice interactions to make chatbots more engaging.

While anthropomorphic agents hold promise for positive applications—such as combating conspiracy theories or promoting charitable actions—a comprehensive approach is needed. Policymakers, developers, and users must collaborate to ensure these systems are designed, deployed, and regulated responsibly.

As AI becomes increasingly adept at influencing human behavior, we must act swiftly to prevent it from reshaping societal norms and systems in ways we cannot control.